Hello, readers! Today, we’re diving into Azure Update Manager, an essential tool for anyone looking to optimize and manage their cloud solutions. Have you heard of it, or are you already using it? We’re eager to hear your thoughts, questions, and experiences. Please leave your comments below, and let’s explore the potential of Azure Update Manager together!

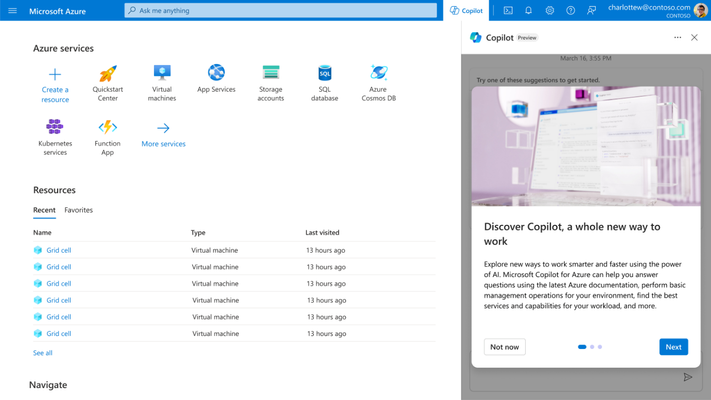

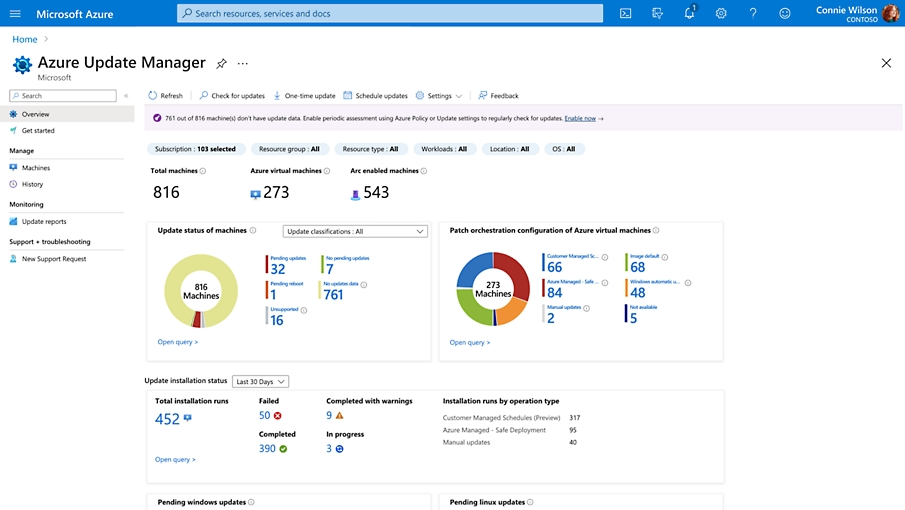

1. What is Azure Update Manager?

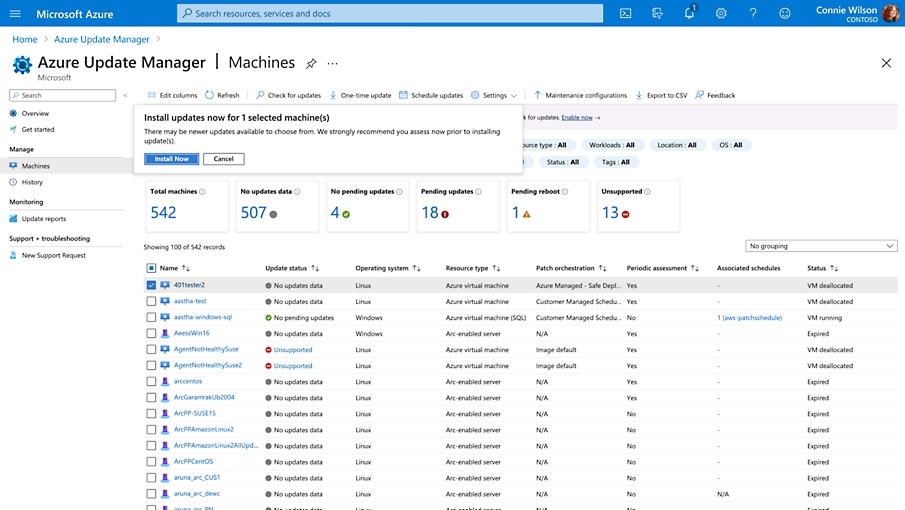

- An introduction to Azure Update Manager, explaining its primary role in managing updates for operating systems and applications on virtual machines.

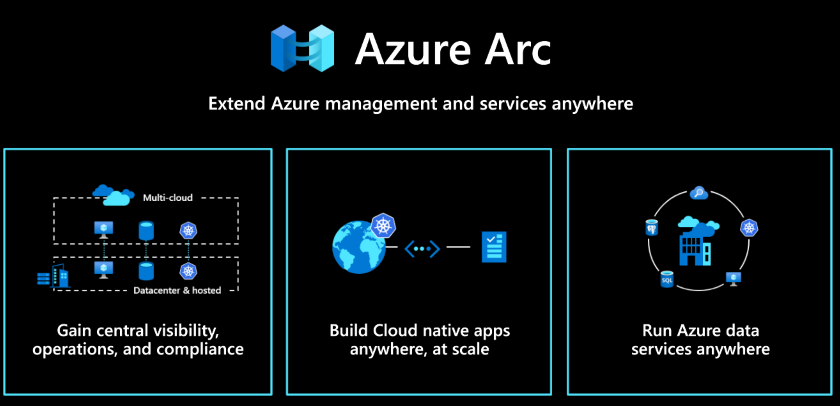

- What is Azure ARC?

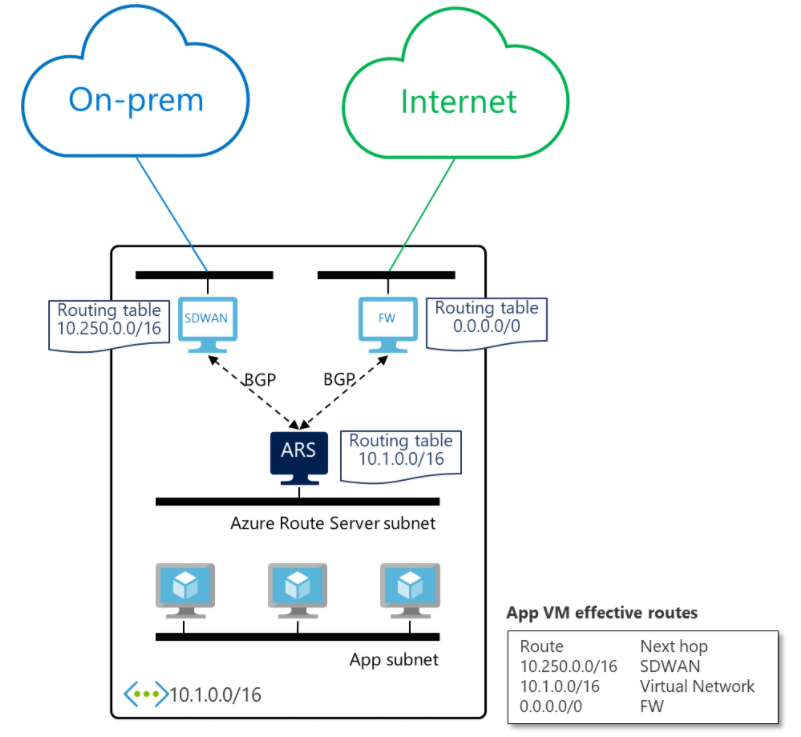

- Azure ARC is a Microsoft solution that allows you to manage resources from different cloud environments and on-premises locations directly from Azure.

- What is Azure ARC?

2. Benefits of Azure Update Manager

- Automation: How Update Manager automates the update process, reducing operational overhead.

- Compliance: The importance of keeping systems updated to meet compliance and security requirements.

- Azure Arc

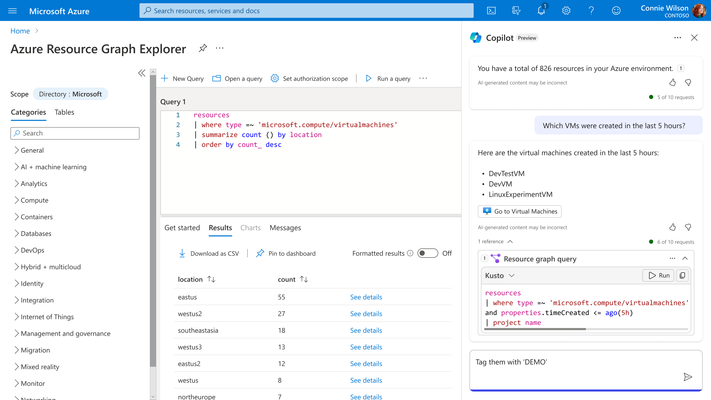

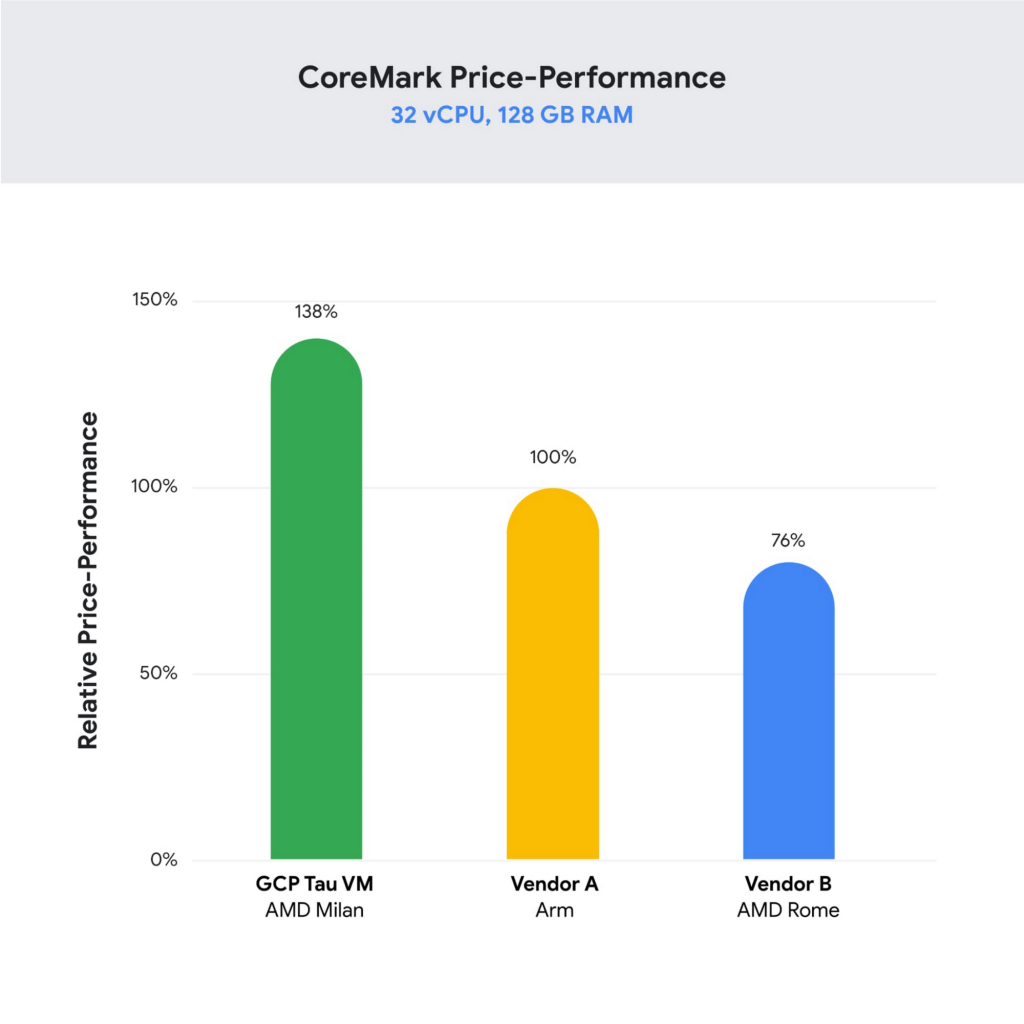

- One of the significant advantages of Azure Arc is the ability to manage updates for machines hosted with various cloud providers from a single dashboard. Regardless of where your applications and services are hosted (Azure, AWS, Google Cloud, or on-premises servers), you can monitor and apply updates centrally.

3. Integration with Azure Automation

- How Azure Update Manager can work alongside Azure Automation (for example, runbooks triggered before or after a maintenance run) to facilitate large-scale update management.

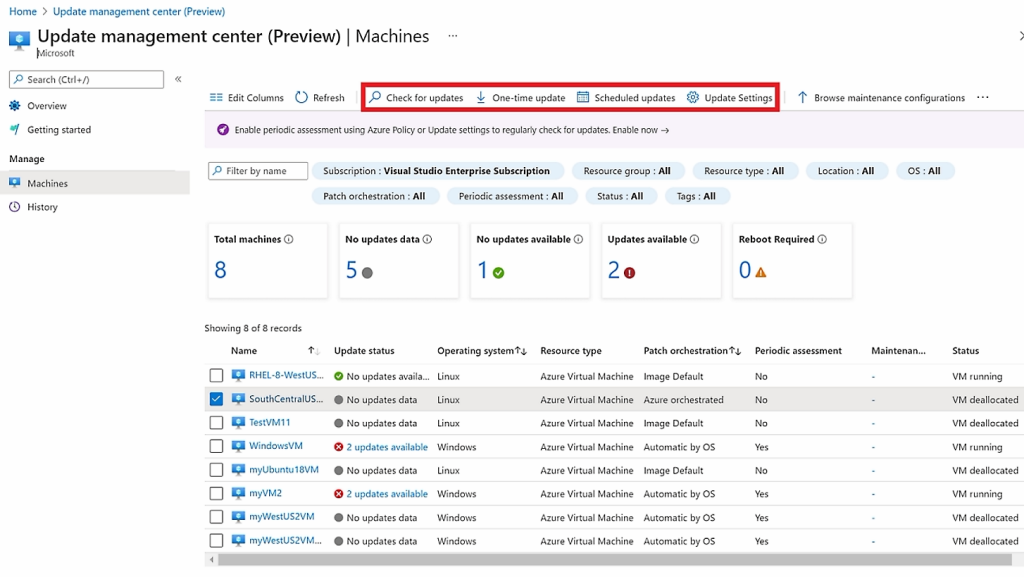

4. Configuration and Implementation

- A step-by-step guide on how to set up Azure Update Manager in an organization, including best practices.

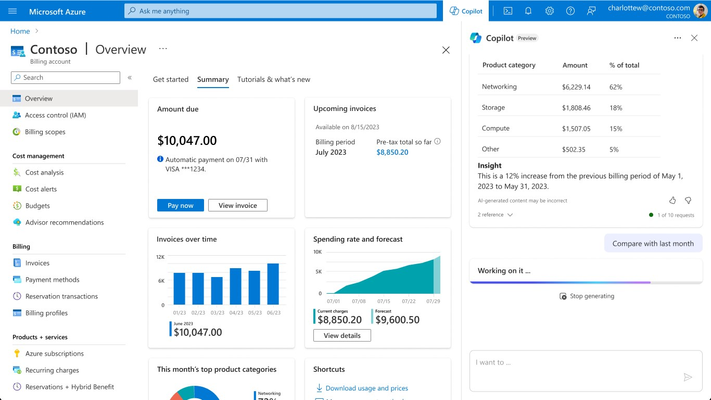

5. Reporting and Monitoring

- The importance of detailed reporting and continuous monitoring of applied updates, and how this can be achieved with Azure Update Manager.
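To make the reporting idea concrete, here is a minimal sketch of the kind of compliance summary you might build from exported update data. It does not call any Azure API: the `MachineStatus` type, the `summarize` function, and the sample inventory are all hypothetical, and "compliant" is assumed here to mean "no pending critical updates".

```python
from dataclasses import dataclass


@dataclass
class MachineStatus:
    """Hypothetical record for one machine (not an Azure SDK type)."""
    name: str
    environment: str       # e.g. "Azure", "AWS", "on-premises"
    pending_critical: int  # critical updates still missing
    pending_other: int     # any other missing updates


def summarize(machines):
    """Count compliant and non-compliant machines per environment.

    Assumption: a machine is compliant when it has no pending critical updates.
    """
    summary = {}
    for machine in machines:
        counts = summary.setdefault(
            machine.environment, {"compliant": 0, "non_compliant": 0}
        )
        key = "compliant" if machine.pending_critical == 0 else "non_compliant"
        counts[key] += 1
    return summary


# Made-up sample inventory, for illustration only.
machines = [
    MachineStatus("web-01", "Azure", pending_critical=0, pending_other=3),
    MachineStatus("web-02", "Azure", pending_critical=2, pending_other=1),
    MachineStatus("db-01", "on-premises", pending_critical=0, pending_other=0),
    MachineStatus("api-01", "AWS", pending_critical=1, pending_other=4),
]

report = summarize(machines)
for environment, counts in sorted(report.items()):
    print(f"{environment}: {counts['compliant']} compliant, "
          f"{counts['non_compliant']} non-compliant")
```

Grouping by environment mirrors the single-dashboard view: one report covers machines in Azure, in other clouds, and on-premises.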

Centralization: With Azure Arc, I don’t need to switch between different management consoles. Everything is accessible in one place, which makes it easier to visualize and control. This helps me a lot in my daily tasks, especially centralized patch scheduling and defining custom maintenance windows for more efficient deployment, all in a single console.
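As a rough illustration of what a custom patch window means in practice, the sketch below models a weekly window and checks whether a given moment falls inside it. The `MaintenanceWindow` class and its fields are hypothetical and only show the logic; in Azure Update Manager the schedule itself is defined in the service, not in code like this.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class MaintenanceWindow:
    """Hypothetical weekly patch window (not an Azure SDK type)."""
    weekday: int         # 0 = Monday ... 6 = Sunday
    start_hour: int      # hour of day the window opens (0-23)
    duration_hours: int  # how long the window stays open

    def contains(self, moment: datetime) -> bool:
        """Return True if `moment` falls inside this weekly window."""
        # Find the most recent window start at or before `moment`.
        days_back = (moment.weekday() - self.weekday) % 7
        start = (moment - timedelta(days=days_back)).replace(
            hour=self.start_hour, minute=0, second=0, microsecond=0
        )
        if start > moment:
            start -= timedelta(days=7)
        return moment < start + timedelta(hours=self.duration_hours)


# Example: patch on Saturdays from 22:00 for four hours (ends Sunday 02:00).
window = MaintenanceWindow(weekday=5, start_hour=22, duration_hours=4)

inside = window.contains(datetime(2024, 6, 2, 1, 0))   # Sunday 01:00
before = window.contains(datetime(2024, 6, 1, 21, 0))  # Saturday 21:00
after = window.contains(datetime(2024, 6, 2, 3, 0))    # Sunday 03:00
print(inside, before, after)
```

Searching backwards for the latest window start lets a window that crosses midnight be handled the same way as one that fits within a single day.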

By leveraging this tool, we have greatly minimized the impact during patch windows and enhanced our visibility into all ongoing activities. We are very pleased with the results! 🚀🚀

I hope this post has shed light on the benefits of Azure Update Manager. Have you had any experience with it? If you haven’t started using Azure Arc yet, it’s worth considering as a way to streamline your IT infrastructure management. I’d love to hear your thoughts and questions! Please leave your comments below, and let’s continue the conversation!😉

https://azure.microsoft.com/en-us/products/azure-update-management-center

https://azure.microsoft.com/pt-br/products/azure-arc